Image by Midjourney version 5.

As a paying subscriber to OpenAI’s ChatGPT, today I got the opportunity to try the new GPT-4 model as the underlying engine for the chatbot. This was mildly interesting because I did notice changes right away. But the changes I noticed were mostly stylistic:

The old ChatGPT-3.5 had a more informal, conversational phrasing but visually used a compact style and also never posted more than a screenful at a time.

The new ChatGPT-4 favored lists of paragraphs, typically in two levels (1a, 1b, 1c, 2a, 2b…), and each answer was longer. (It may have looked longer partly because it was broken up into many shorter paragraphs, but I believe there was also more text overall.) The new style looked less chatty and more like what you would expect from an artificial intelligence, or at least a serious university student.

The new format is not a coincidence, I think. ChatGPT did have a reputation for guesstimating and sometimes “hallucinating” false facts if it ran out of real ones. (Here in Norway at least, libraries have complained that students ordered books that didn’t exist, but which ChatGPT had recommended to them.) GPT-4 is supposed to be more logical, even more knowledgeable, and less prone to hallucination. I believe the new love for leveled lists is an attempt to come across as more professional and formal, at the cost of being less folksy.

***

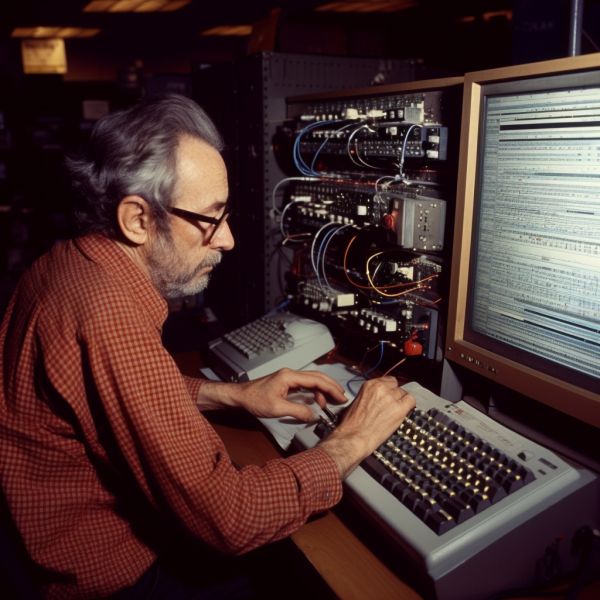

By sheer coincidence – or perhaps an invisible guiding hand – I got a message on the Midjourney server the same day: Midjourney version 5 was ready for testing for us paying subscribers. (Yes, a little payment here and a little payment there, a little payment here and there.) The new version has far more detail by default, and it has become pretty good at landscapes and cityscapes. The number and size of fingers are further improved (there are usually only five now and they don’t have extra joints) but my tests of mythological creatures like fairies and mermaids will occasionally still come out with an extra arm or leg. Maybe there just aren’t that many photos of mythological creatures…

***

My inspiration for today’s picture was something I have written about a couple of times before. There is a concept called “the river of time”, and I have mentioned how in my childhood this seemed like a large quiet river running gently across the plains, but that it had now turned into churning rapids and I could hear the sound of a great waterfall ahead of us. Well, I think we are almost there now, and there is no way back without razing civilization to the ground. (Which Putin seems to consider, but I don’t approve.)

This dramatic change does not come from our natural environment this time, nor from the way in which we organize our societies, although these too are affected. It is a waterfall of technology, a change so rapid that there may soon be no steering through it and we have no idea where we will come out, if we come out alive at all. In a sense, it has already begun, but it is still speeding up and we are still not in freefall, so to speak.

“This changes everything,” said Steve Jobs, the boss of Apple, when he introduced the iPhone. And the smartphone did change a lot of things and still changes more and more, although the concept of the smartphone actually already existed with the Symbian operating system for Nokia phones, and Android was released shortly after.

(Incidentally, when checking the quote on Google, it is now attributed to Professor Naomi Klein, who used it as part of her book title several years later. I actually took a course on climate change where Klein lectured while she was working on that book, and she was pretty good. But I had not expected her to become the kind of “superstar” that would edge out Steve Jobs, who was revered as an invincible superhuman during his rather short lifetime.)

***

I habitually time travel with my mind. That is, I place myself back in my body at some point in my past and look around. It is fascinating that 20 years ago, the smartphone as we know it did not exist yet. We had mobile phones aplenty here in Norway, although the US was still lagging. I believe Japan was the only place that was ahead of Scandinavia in mobile phone use at the time. But these phones had limited capabilities and were rather expensive to use. They were still not used as cameras or music players, let alone video players.

30 years ago, in 1993, the Internet was not yet available in private homes, at least outside the USA. Universities had it on campus, but the use was somewhat limited, and there wasn’t much content available on the World Wide Web. There were BBSes though, electronic bulletin boards, and Usenet was fully functional. Only we geeks used these things though. And if we wanted to buy a book, we had to do so from a brick-and-mortar bookstore, although you could occasionally find an order form in a (paper) magazine.

40 years ago, in 1983, the PC revolution was likewise just for tech geeks. At my workplace, I was the only person who took an interest in this and tried to introduce it. As a result, I briefly became involved in the introduction of this type of technology at work, when the time was ripe for it. I never sought any leading position in this work though; human ambitions are ridiculous to me. If you can’t be a weakly godlike superintelligence persisting for thousands of years, why bother?

50 years ago, in 1973, I was still in middle school. Our farm shared a landline with three other farms, and the switchboard ladies used a different combination of ring lengths for each farm so we knew which family the phone call was for. We rarely used the phone though, because phone calls were expensive. Computers were huge, filling entire rooms and needing experts standing by. I had a small book about computers that predicted that within our lifetime, personal computers would be found in private homes. I may well have been the only person in our municipality to believe that, though, or even think about such things at all.

So change has always been part of my life. But the pace of change is accelerating, and now that acceleration is accelerating as well. ChatGPT was introduced on November 30, 2022, and within a week it had a million users. It has continued to cause a frenzy, with a large number of employers stating that they will use it to reduce the number of employees, and with high school teachers complaining that their students use ChatGPT to do their homework. With the new, improved version arriving about four months later, that usage will likely move upward to colleges.

***

Nor is the acceleration limited to fun stuff like making fake photos or fake high school essays. With the assistance of AI, the Moderna vaccine against Covid-19 was made in a few days. (The rest of the roughly 9 months before it was released, during which millions died around the world, was mostly safety testing. Too bad they didn’t hold back the virus too for 9 months. Well, except for New Zealand and most of the Nordic countries, we basically did that.) In the days of old, it used to take years, often closer to a decade, to make a new vaccine. Now it takes days. Days, instead of years.

Lately, artificial intelligence is not only writing software but also designing processors for computers. This was one of the defining elements of the proposed “technological singularity”, where computers make better computers, accelerating the capacity of computers rapidly beyond human levels and leaving humans in the dust, either as pampered pets or as corpses. So far though, it seems that AI is bound by the same laws of nature as we are. Simply having an AI design a new computer does not magically make it a thousand times faster – the change is incremental at best. Of course, this will change if the AI discovers completely new laws of nature in that particular field. Not holding my breath for that, though.

Even so, incremental change is still change, and it goes faster and faster. What will happen when steadily better versions of ChatGPT and its future competitors become available for free or at a low cost on mobile phones all over the world? My Pixel 7 is already very good at transcribing spoken English (and probably some other major languages) and also reading out loud. Kids might grow up talking to AI more than to their parents. Even if the robots don’t revolt and replace humanity, we are still looking at a humanity that is radically different from anything we have seen before. Basically most people will be cyborgs, a symbiosis of human and computer, of natural and artificial intelligence. I would love to see what comes out on the other side of this transition. And at the current pace of change, I just might live to see it… at least if I drink less Pepsi.