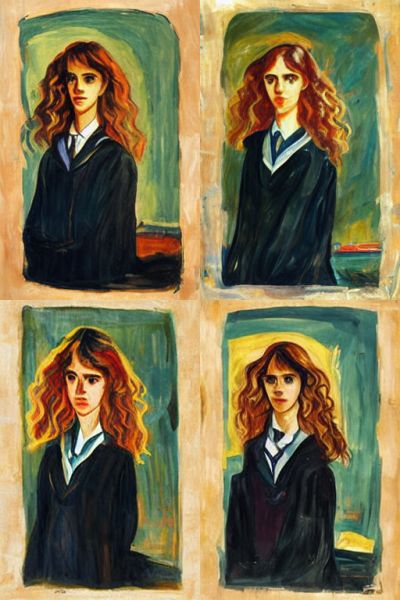

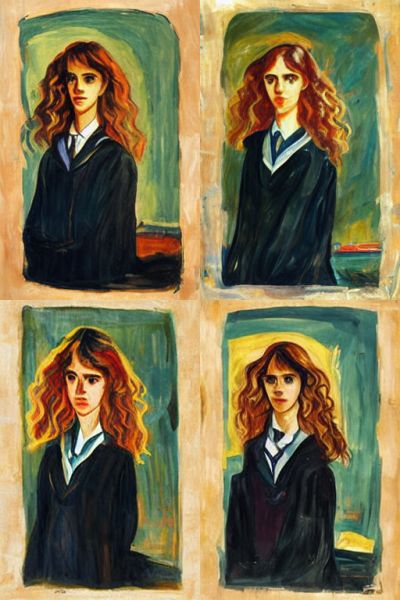

Hermione Granger (from the Harry Potter series) painted by Edvard Munch. If you think MidJourney here is plagiarizing Munch’s original, I have a very expensive bridge to sell you.

I recently mentioned using artificial intelligence to create visual art. Text-to-image applications like DALL-E 2, MidJourney, and Stable Diffusion all use machine learning based on enormous numbers of pictures scraped from the Internet. Now some contemporary artists have discovered that some of their work is included in the underlying dataset used for training the AI, and are upset that they have not been asked and not been compensated.

This reaction is caused by their ignorance, of course. I can’t blame them: Modern society is very complex, and human brains are limited. Yes, even mine. I could not fix a car engine if my life depended on it, for instance. I have only vague ideas of what it would take to limit toxic algal blooms. And to be honest, I could not make my own AI even if I had the money. I just happen to have a very loose idea of how they work because it interests me, because I don’t have a family to worry about, and because I don’t have a job that requires me to spend my free time thinking about work.

Anyway, I shall take it upon myself to explain why you should politely ignore the cries of the artists who feel deprived of money and acknowledgment by AI text-to-image technology.

The fundamental point is that learning is not theft. I hope we can agree on this. Obviously, there are exceptions, such as trade secrets like the recipe for Coca-Cola or the source code for Microsoft Windows. If someone learns those and uses them to create a competing product, it is considered theft of intellectual property. But if an art student studies your painting along with thousands of other paintings, and then goes on to paint their own paintings, that is not theft. If they make a painting that is a copy of yours, then yes, that is plagiarism and it infringes on your copyright. But simply learning from it along with many, many others? That is fair use, very much so. If you don’t want people to learn from you, then you need to keep your art to yourself. You can’t decide who gets to look at your art unless you keep it private.

The uproar is probably based on not knowing how the “diffusion” model of AI works. So let me see if I can explain that in plain terms. Given our everyday use of computers, it is easy to think that the AI keeps a copy of your painting in its data storage and can recall it at some later time. After all, that is what Microsoft Office does with letters, right? But machine learning is a fundamentally different process. The AI has no copy of your artwork stored in its memory, just a general idea of your style and of particular topics. This stems from how “diffusion” works.

When a program like MidJourney or Stable Diffusion gets a text prompt, it starts from a completely “diffuse” canvas: in practice a field of random noise, much like the static on an old TV screen. It then goes through many steps of nudging that noise into shapes that fit the description it has been given. (It can do this because during training it has seen the opposite process millions of times: images gradually dissolved into noise. Thus the name “diffusion”.) You can, if you have the patience, watch the images gradually become less and less diffuse, slowly starting to resemble the topic of the prompt. In other words, it starts with a completely diffuse image that becomes clearer and clearer. You can upscale such an image and the AI will add details that seem appropriate for the context. (Especially until recently, this could include adding extra fingers or even eyes, but the latest versions are getting better at this.)
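For readers who like to see things in code, here is a toy sketch of the two directions. It is emphatically not how MidJourney or Stable Diffusion is implemented. The “image” is just eight numbers, the dissolving is done by mixing in a little random noise at each step, and the function names (`add_noise`, `toy_denoise_step`) as well as the `concept` list are my own inventions for illustration. In a real system the role of `concept` is played by a large trained network reading the prompt; here the toy simply cheats and writes it down by hand.

```python
import math
import random


def add_noise(pixels, beta, rng):
    """One forward step: shrink the signal a little and mix in Gaussian noise."""
    keep = math.sqrt(1.0 - beta)
    spread = math.sqrt(beta)
    return [keep * p + spread * rng.gauss(0.0, 1.0) for p in pixels]


def toy_denoise_step(pixels, concept, strength):
    """One reverse step: move each pixel a fraction of the way toward `concept`.

    ASSUMPTION: `concept` is a hand-written stand-in for what a trained
    network would predict from the prompt. A real model computes this.
    """
    return [p + strength * (c - p) for p, c in zip(pixels, concept)]


def distance(a, b):
    """Euclidean distance between two equally long pixel lists."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


rng = random.Random(0)

# Forward direction: a tiny striped "image" dissolves into noise.
stripes = [1.0, -1.0, 1.0, -1.0, 1.0, -1.0, 1.0, -1.0]
beta, forward_steps = 0.05, 200
noisy = stripes
for _ in range(forward_steps):
    noisy = add_noise(noisy, beta, rng)
# Fraction of the original signal that survives all the forward steps.
signal_left = math.sqrt(1.0 - beta) ** forward_steps

# Reverse direction: start from pure noise and sharpen step by step.
concept = list(stripes)  # hypothetical stand-in for the model's idea of "stripes"
canvas = [rng.gauss(0.0, 1.0) for _ in concept]
start_error = distance(canvas, concept)
for _ in range(50):
    canvas = toy_denoise_step(canvas, concept, strength=0.1)
end_error = distance(canvas, concept)

print(f"signal left after noising: {signal_left:.4f}")
print(f"distance to concept: {start_error:.3f} -> {end_error:.3f}")
```

After the forward loop less than one percent of the original stripes is left in `noisy`, and in the reverse loop the canvas creeps a tenth of the remaining distance toward the concept at every step, which is the “clearer and clearer” effect in miniature.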

It is worth noting that there is also a very large random seed included in the process, meaning that you could give the AI the same prompt thousands and thousands of times and get a different version of the image every time. Sometimes the images will be similar, sometimes strikingly different, depending on how detailed your request is. Once an image catches your eye, you can make variants of it, and these too have a virtually unlimited number of variations.
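The role of the seed can be sketched with Python’s standard `random` module. This only shows how a seeded pseudo-random generator behaves in general; the helper `starting_noise` is hypothetical, and I am not claiming this is how any particular service handles its seeds.

```python
import random


def starting_noise(seed, size=6):
    """Return a short list of pseudo-random 'pixels' determined by the seed."""
    rng = random.Random(seed)
    return [round(rng.gauss(0.0, 1.0), 3) for _ in range(size)]


first = starting_noise(seed=1234)
repeat = starting_noise(seed=1234)   # same seed: the same starting canvas
other = starting_noise(seed=5678)    # new seed: a different starting canvas

print(first == repeat)
print(first == other)
```

Since everything that follows is built on that starting canvas, a fresh seed for every request is enough to send the same prompt off in a new direction each time.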

At no point in this process does the AI bring up the original image, because there are no original images stored in its memory, just a general, diffuse idea of what the topic should look like. And in the same way, it only has a general, diffuse idea of what a particular artist’s style is. My “Munch” paintings certainly look more like Munch than Monet, but it is still unlikely that Edvard Munch would actually have painted the exact same picture. In this case, of course, it is literally impossible, and that is exactly the scenario where we want to use engines like these. “What if Picasso had painted the Sistine Chapel? What if Michelangelo and van Gogh had cooperated on painting a portrait of Bill Gates?” The AI is simply not optimized for rote plagiarism, but for approximation. It is like a human who spent 30 years in art school practicing a little of this and a little of that, becoming a pretty good jack of all trades but a master of none. They can’t exactly recall any of the tens of thousands of pictures they have been practicing on, but they’ve got the gist of it.

***

As for today’s picture, it was made by MidJourney using the simple prompt “Hermione Granger, painted by Edvard Munch --ar 2:3” where ar stands for aspect ratio, the width compared to the height. This generated four widely different pictures, and I chose one of them and asked for variations of that. This retains the essential elements of the picture but allows for minor variations, as you see above. So it is not because the AI had an original picture to plagiarize; I asked it to make variations on its own picture. With some AI engines, you can in fact upload an existing picture and modify it, but this is entirely your choice, just like if you modify a picture in GIMP or Photoshop. The usual legal limitations apply: you cannot hide behind “an AI did it!”. So far, AIs are not considered persons. Maybe one day?